Testing approaches that treat quality assurance as a separate phase at the end of development struggle to keep up with rapid delivery and continuous improvement. This guide explores how Agile Testing changes quality assurance by integrating it throughout the development lifecycle, fostering collaboration, and supporting continuous delivery of high-quality software.

Quick Overview: This guide will equip you with practical knowledge about implementing Agile Testing in your organization, understanding the roles and responsibilities of each team member, and mastering the tools and techniques that make Agile Testing successful. We'll cover everything from basic principles to advanced automation strategies.

What is Agile Testing?

From a QA Engineer’s perspective, Agile Testing represents a shift from traditional testing methodologies. Instead of waiting for development to complete, you’ll be actively involved throughout the development lifecycle, from requirements gathering to production deployment.

Core Responsibilities of a QA Engineer in Agile

Early Involvement

As a QA Engineer, your role begins at the sprint planning phase:

- Review user stories for testability

- Identify potential testing challenges early

- Provide input on test data requirements

- Estimate testing effort for each story

Quality Advocacy

Your key responsibility is to be the quality champion:

- Guide the team on testing best practices

- Identify potential risks and edge cases

- Ensure acceptance criteria are testable

- Promote test automation where beneficial

Continuous Testing

Your testing activities run parallel to development:

- Review code changes as they’re made

- Execute automated tests frequently

- Perform exploratory testing early

- Provide immediate feedback to developers

Agile Testing Cycles and Timelines

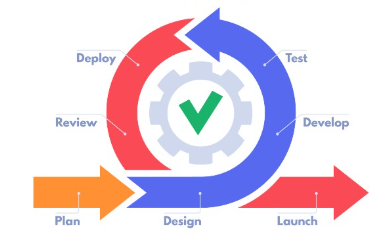

The Software Development Life Cycle (SDLC) in Agile is an iterative process that breaks down the overall project lifecycle into smaller, manageable cycles. While the traditional SDLC follows a linear path (Waterfall), Agile SDLC is cyclical and iterative, with each iteration delivering incremental value through sprints.

Each phase of the SDLC (Planning, Design, Development, Testing, Deployment, and Review) is compressed into these sprint cycles, so teams move through a miniature version of the entire lifecycle in every sprint. This approach provides several benefits:

- Faster Feedback: Each sprint cycle completes a full SDLC iteration, providing quick feedback on all aspects of development

- Reduced Risk: Regular deliveries mean issues are caught and addressed early

- Continuous Improvement: The Review phase of each sprint informs the Planning phase of the next

- Incremental Value: Each sprint delivers working software that adds business value

Sprint cycles (whether 1-week, 2-week, or 4-week) represent these complete mini-SDLCs, where teams:

- Plan features and requirements

- Design solutions

- Develop code

- Test thoroughly

- Deploy to production

- Review and adapt

Sprint Cycle Variations

1-Week Sprint Cycle

A tight one-week sprint can be implemented in different ways, depending on your team’s maturity and requirements. Let’s look at two approaches:

Extreme Programming (XP) Style

This high-intensity approach maximizes parallel development and testing:

XP Testing Timeline:

- Monday - Wednesday:

  - Rapid development with continuous QA involvement

  - PR reviews and testing as features complete

  - Bug fixes and verification in real time

- Wednesday PM - Thursday AM:

  - Focused regression testing

  - UAT preparation and execution

  - Release readiness verification

- Thursday PM - Friday:

  - Production deployment

  - Post-deployment verification

  - Test automation development

  - New feature development start

Traditional Scrum Style

A more balanced approach with dedicated phases:

```mermaid
graph TD
    A[Monday: Planning] -->|Full Day| B[Tuesday: Development]
    B --> C[Wednesday: Development + Testing]
    C --> D[Thursday: Testing + Bug Fixes]
    D --> E[Friday: Release + Review]
```

Scrum Testing Timeline:

- Monday:

  - Sprint planning and story refinement

  - Test planning and environment prep

  - Review previous sprint metrics

- Tuesday - Wednesday:

  - Development with unit testing

  - Initial feature testing

  - Continuous PR reviews

- Thursday:

  - Complete feature testing

  - Regression testing

  - Bug fixes and verification

- Friday:

  - Release preparation

  - Deployment and verification

  - Sprint review and retrospective

Choosing Your Approach: The XP style works best for mature teams with strong automation and CI/CD practices. The Scrum style provides more structure and often suits teams transitioning from longer sprints. Both approaches can be effective; choose based on your team's capabilities and business requirements.

2-Week Sprint Cycle

This is the most common sprint duration, and it leaves more room for thorough testing:

```mermaid
graph TD
    A[Week 1: Development Phase] --> B[PR Reviews & Testing]
    B --> C[Week 2: QA & Regression]
    C --> D[UAT & Staging]
    D --> E[Production Deploy]
```

QA Activities Timeline:

- Week 1

  - Days 1-2: Test planning and environment setup

  - Days 3-5: Feature testing as development progresses

  - Days 6-7: Initial regression testing

- Week 2

  - Days 8-9: Complete feature testing

  - Days 10-11: Full regression suite

  - Days 12-13: UAT support

  - Day 14: Deployment and verification

4-Week Sprint Cycle

Longer cycles suitable for complex features and thorough testing:

Week 1: Planning & Initial Development

- Sprint planning and test strategy development

- Test environment preparation

- Test case creation and review

- Early feature testing

Week 2: Development & Testing

- Continuous feature testing

- Automation script development

- Performance test planning

- Security testing preparation

Week 3: Integration & System Testing

- Integration testing

- System testing

- Performance testing execution

- Security testing execution

Week 4: Stabilization & Release

- Regression testing

- UAT support

- Release preparation

- Production deployment support

CI/CD Integration Points

Continuous Integration Checkpoints

- PR Validation: Automated tests run on every pull request

- Merge Checks: Code coverage and quality gates

- Nightly Builds: Full regression suite execution
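The merge check above can be expressed as a small gate function that a pipeline step evaluates before allowing a merge. This is a minimal, framework-free TypeScript sketch: it assumes an earlier step has already produced a test summary and a line-coverage percentage, and the field names and the 80% default threshold are hypothetical, not values prescribed by any CI tool.

```typescript
// Hypothetical summary produced by earlier pipeline steps (field names are assumptions).
interface PipelineResult {
  testsRun: number;
  testsFailed: number;
  lineCoverage: number; // percentage, 0-100
}

interface GateDecision {
  passed: boolean;
  reasons: string[];
}

// Assumed policy: tests actually ran, none failed, and coverage meets the threshold.
function evaluateMergeGate(result: PipelineResult, minCoverage: number = 80): GateDecision {
  const reasons: string[] = [];
  if (result.testsRun === 0) {
    reasons.push("No tests were executed");
  }
  if (result.testsFailed > 0) {
    reasons.push(`${result.testsFailed} test(s) failed`);
  }
  if (result.lineCoverage < minCoverage) {
    reasons.push(`Coverage ${result.lineCoverage}% is below the ${minCoverage}% gate`);
  }
  return { passed: reasons.length === 0, reasons };
}

// Example: one failing test and 76.5% coverage, so the merge is blocked for two reasons.
const decision = evaluateMergeGate({ testsRun: 120, testsFailed: 1, lineCoverage: 76.5 });
console.log(decision.passed, decision.reasons);
```

Returning every reason rather than stopping at the first one gives the PR author the complete list of what to fix in a single pipeline run.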

Continuous Deployment Stages

- Development: Continuous deployment for feature testing

- Staging: Daily deployments for integration testing

- UAT: Scheduled deployments for user acceptance

- Production: Controlled releases with verification

Release Management Tip: Regardless of sprint duration, maintain a release checklist that includes environment verification, smoke testing, and rollback procedures. This ensures consistent quality across all deployments.
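Keeping the release checklist as data lets the team review it in version control and check it automatically before each deployment. This TypeScript sketch is illustrative only: the three items mirror the tip (environment verification, smoke testing, rollback procedures), while the `ChecklistItem` shape and the `markDone` and `pendingItems` helpers are hypothetical.

```typescript
// Hypothetical checklist item: a named check plus whether it has been confirmed.
interface ChecklistItem {
  id: string;
  description: string;
  done: boolean;
}

// Items taken from the release management tip; teams can extend the list.
function createReleaseChecklist(): ChecklistItem[] {
  return [
    { id: "env", description: "Target environment verified", done: false },
    { id: "smoke", description: "Smoke tests passed on the target environment", done: false },
    { id: "rollback", description: "Rollback procedure documented and ready", done: false },
  ];
}

// Returns the ids of items that still block the release.
function pendingItems(checklist: ChecklistItem[]): string[] {
  return checklist.filter((item) => !item.done).map((item) => item.id);
}

// Marks one item complete without mutating the input; unknown ids are an error.
function markDone(checklist: ChecklistItem[], id: string): ChecklistItem[] {
  if (!checklist.some((item) => item.id === id)) {
    throw new Error(`Unknown checklist item: ${id}`);
  }
  return checklist.map((item) => (item.id === id ? { ...item, done: true } : item));
}

let releaseChecklist = createReleaseChecklist();
releaseChecklist = markDone(releaseChecklist, "env");
releaseChecklist = markDone(releaseChecklist, "smoke");
console.log(pendingItems(releaseChecklist)); // [ 'rollback' ]
```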

The Agile Testing Process

QA Engineer’s Role in Testing Quadrants

The Agile Testing Quadrants framework helps you organize your testing strategy. Here’s your responsibility in each quadrant:

Q1 - Technology-Facing Tests

Primary Role

- Review unit tests for coverage

- Assist in integration test design

- Maintain test automation framework

Supporting Role

- Collaborate with developers on TDD

- Suggest test scenarios for edge cases

These tests are focused on ensuring that the code works as expected at the unit or component level. They are typically automated and provide immediate feedback to developers.

Q2 - Business-Facing Tests

Primary Role

- Write and maintain acceptance tests

- Design end-to-end test scenarios

- Create test data strategies

Supporting Role

- Review acceptance criteria

- Participate in story refinement

These tests verify that the system behaves as expected from a business perspective. They include functional tests, acceptance tests (such as those written in ATDD or BDD style), and other tests that validate user stories and requirements.

Q3 - Business-Facing Critique

Primary Role

- Plan and execute exploratory testing

- Conduct usability testing sessions

- Document user experience issues

Supporting Role

- Gather user feedback

- Suggest UX improvements

These tests are usually performed manually by QA, largely through exploratory testing, to assess the product's usability, user acceptance, and overall alignment with business needs. They help reveal issues that automated tests alone may not catch.

Q4 - Technology-Facing Critique

Primary Role

- Design performance test scenarios

- Execute security testing

- Monitor non-functional requirements

Supporting Role

- Collaborate on performance optimization

- Review security implementations

These tests examine non-functional aspects of the application, such as performance, load, and security. They ensure that the product not only functions correctly but also meets quality and performance standards under various conditions.

QA Focus: Remember that while each quadrant has distinct responsibilities, they're all interconnected. Your role as a QA Engineer is to ensure comprehensive test coverage across all quadrants while maintaining the right balance between automated and manual testing approaches.
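One way to act on this balance is to tag each planned test with its quadrant and flag any quadrant with nothing planned. The TypeScript sketch below assumes a hypothetical `PlannedTest` record; the quadrant descriptions follow the sections above, and the example test titles are illustrative.

```typescript
// The four Agile Testing Quadrants described above.
type Quadrant = "Q1" | "Q2" | "Q3" | "Q4";

const QUADRANT_LABELS: Record<Quadrant, string> = {
  Q1: "Technology-facing tests (unit, integration)",
  Q2: "Business-facing tests (functional, acceptance)",
  Q3: "Business-facing critique (exploratory, usability)",
  Q4: "Technology-facing critique (performance, security)",
};

// Hypothetical record for a test planned during the sprint.
interface PlannedTest {
  title: string;
  quadrant: Quadrant;
  automated: boolean;
}

// Returns the quadrants that have no planned tests at all.
function uncoveredQuadrants(plan: PlannedTest[]): Quadrant[] {
  const all: Quadrant[] = ["Q1", "Q2", "Q3", "Q4"];
  return all.filter((q) => !plan.some((t) => t.quadrant === q));
}

// Share of planned tests that are automated: a rough manual/automated balance indicator.
function automationRatio(plan: PlannedTest[]): number {
  if (plan.length === 0) return 0;
  return plan.filter((t) => t.automated).length / plan.length;
}

const sprintPlan: PlannedTest[] = [
  { title: "Password validator unit tests", quadrant: "Q1", automated: true },
  { title: "Registration acceptance scenarios", quadrant: "Q2", automated: true },
  { title: "Registration exploratory session", quadrant: "Q3", automated: false },
];

for (const q of uncoveredQuadrants(sprintPlan)) {
  console.log(`Gap in ${q}: ${QUADRANT_LABELS[q]}`);
}
```

Here the plan has nothing in Q4, which would prompt the team to schedule performance or security checks before the sprint ends.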

QA Activities in Sprint Ceremonies

Sprint Planning

Your responsibilities as a QA Engineer:

QA Sprint Planning Tasks:

Daily Stand-up

Focus on testing progress and blockers:

QA Daily Updates:

Sprint Review

Your key responsibilities:

QA Sprint Review Tasks:

QA Pro Tip: Keep a testing journal during the sprint. Document all testing decisions, challenges, and solutions. This information is invaluable during sprint reviews and retrospectives, and helps in improving the testing process.

Sprint Workflow and Testing Integration

Requirements and Testing Workflow

Before diving into sprint planning, establish a solid requirements and testing foundation:

Requirement Verification Process

```mermaid
graph TD
    A[Gather Requirements] --> B[Document in Jira]
    B --> C[Define Acceptance Criteria]
    C --> D[Stakeholder Review]
    D --> E[Refine Based on Feedback]
    E --> F[Final Approval]
    F --> G[Create Test Cases]
```

Implementation Tracking

Story Breakdown

- Create sub-tasks for development and testing

- Link automated test cases to acceptance criteria

- Track progress using Jira plugins (Zephyr/Xray)

Verification Points

- Daily progress updates in stand-ups

- Regular test execution reports

- Continuous feedback loop with stakeholders

Pro Tip: Use Jira's linking features to create relationships between requirements, test cases, and bugs. This traceability helps track the impact of changes and ensures complete test coverage.
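The traceability described in this tip amounts to a mapping from each requirement to its linked test cases and bugs. A minimal TypeScript sketch follows, assuming the links have already been exported from Jira (for example via Zephyr or Xray); the `TraceLink` shape and issue keys such as `SHOP-101` are hypothetical.

```typescript
// Hypothetical exported link: an artifact (test case or bug) attached to a requirement.
interface TraceLink {
  requirement: string;
  kind: "test" | "bug";
  key: string;
}

interface TraceRow {
  requirement: string;
  tests: string[];
  bugs: string[];
}

// Builds a traceability matrix, keeping requirements with no links visible.
function buildMatrix(requirements: string[], links: TraceLink[]): TraceRow[] {
  return requirements.map((req) => {
    const related = links.filter((l) => l.requirement === req);
    return {
      requirement: req,
      tests: related.filter((l) => l.kind === "test").map((l) => l.key),
      bugs: related.filter((l) => l.kind === "bug").map((l) => l.key),
    };
  });
}

// Requirements without any linked test case are coverage gaps.
function untestedRequirements(rows: TraceRow[]): string[] {
  return rows.filter((row) => row.tests.length === 0).map((row) => row.requirement);
}

const matrix = buildMatrix(
  ["SHOP-101", "SHOP-102", "SHOP-103"],
  [
    { requirement: "SHOP-101", kind: "test", key: "SHOP-T1" },
    { requirement: "SHOP-101", kind: "bug", key: "SHOP-210" },
    { requirement: "SHOP-103", kind: "test", key: "SHOP-T2" },
  ],
);
console.log(untestedRequirements(matrix)); // [ 'SHOP-102' ]
```

When a requirement changes, its row lists the tests to rerun and the bugs to recheck, which is the impact analysis the tip refers to.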

Sprint Planning and Test Strategy

Effective sprint planning integrates testing considerations from the start. Here’s a detailed look at how testing fits into each sprint phase:

Pre-Sprint Planning

- Backlog Refinement

  Testers participate in backlog refinement sessions to:

  - Identify testability concerns early

  - Help define clear acceptance criteria

  - Estimate testing effort

- Test Planning Template

```yaml
Story: User Registration Flow
Test Scope:
  Functional:
    - Form validation
    - Database integration
    - Email verification
  Non-Functional:
    - Performance (< 2s response time)
    - Security (password hashing)
    - Accessibility (WCAG 2.1)
Resources:
  - Test Environment: Staging
  - Test Data: Sample user profiles
  - Tools: Cypress, JMeter
```
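The non-functional entry "Performance (< 2s response time)" only becomes testable once the team agrees how it is measured. The TypeScript sketch below assumes the budget applies to the 95th-percentile response time (a common convention, but an assumption here, since the template does not say) and uses the nearest-rank percentile method; the sample timings are illustrative, not real measurements.

```typescript
// Response-time budget from the test plan (< 2 s), expressed in milliseconds.
const RESPONSE_TIME_BUDGET_MS = 2000;

// Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) {
    throw new Error("No samples collected");
  }
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Assumption: the budget applies to the 95th percentile rather than the average.
function meetsBudget(samples: number[], budgetMs: number = RESPONSE_TIME_BUDGET_MS): boolean {
  return percentile(samples, 95) < budgetMs;
}

// Illustrative registration response times (ms) from a load run.
const registrationTimes = [850, 920, 1010, 1100, 1180, 1240, 1300, 1420, 1650, 2300];
console.log(percentile(registrationTimes, 95)); // 2300
console.log(meetsBudget(registrationTimes)); // false
```

Checking a percentile rather than the average keeps a few slow outliers from hiding behind many fast responses; in practice the samples would come from a JMeter or similar load run.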

Automated Testing Strategy

Building a Robust Automation Framework

A successful automation strategy requires careful planning and implementation. Here’s a detailed approach:

Project Structure

```text
automation-framework/
```

Example E2E Test

```javascript
describe('User Authentication Flow', () => {
```

CI/CD Integration

Setting Up Continuous Testing

A robust CI/CD pipeline ensures that tests are run automatically with each code change. Here’s a comprehensive example using GitHub Actions that includes different testing stages and environments:

```yaml
name: Continuous Testing Pipeline
```

Pro Tip: When setting up your CI/CD pipeline, start with the basics (linting and unit tests) and gradually add more sophisticated stages. This approach allows you to identify and fix integration issues early while building a robust testing infrastructure.
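The incremental approach in this tip can be illustrated with a fail-fast stage runner: cheap stages such as linting and unit tests run first, and later stages run only if earlier ones succeed. This is a framework-agnostic TypeScript sketch; the stage names and the synchronous `run` callbacks are hypothetical stand-ins for real CI jobs.

```typescript
// A pipeline stage: a name and a check that reports success or failure.
interface Stage {
  name: string;
  run: () => boolean;
}

interface PipelineReport {
  completed: string[];
  failedAt: string | null;
  skipped: string[];
}

// Runs stages in order and stops at the first failure, so the fastest feedback comes first.
function runPipeline(stages: Stage[]): PipelineReport {
  const completed: string[] = [];
  for (let i = 0; i < stages.length; i++) {
    if (!stages[i].run()) {
      return {
        completed,
        failedAt: stages[i].name,
        skipped: stages.slice(i + 1).map((s) => s.name),
      };
    }
    completed.push(stages[i].name);
  }
  return { completed, failedAt: null, skipped: [] };
}

// Start with the basics, then append heavier stages as the pipeline matures.
const basicStages: Stage[] = [
  { name: "lint", run: () => true },
  { name: "unit-tests", run: () => true },
];
const maturePipeline: Stage[] = basicStages.concat([
  { name: "integration-tests", run: () => false }, // simulated failure
  { name: "e2e-tests", run: () => true },
]);

console.log(runPipeline(maturePipeline));
```

Ordering stages this way means a lint error is reported in seconds rather than after a long end-to-end run.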

Best Practices and Common Pitfalls

Test Data Management

What Is Test Data Management?

- Test Data Management involves creating, maintaining, and using data specifically for testing purposes.

- A robust test data strategy ensures that tests are reliable, reproducible, and isolated (i.e., one test’s data does not interfere with another’s).

- Centralizing test data creation makes tests cleaner and easier to maintain, as it avoids duplication and hard-coded values scattered throughout your test cases.

The Test Data Factory Pattern

A Test Data Factory is a design pattern used to create test data objects in a centralized and controlled manner. This pattern helps in:

- Creating consistent test data: Every time you need a test user or any other test object, you call the factory method.

- Ensuring uniqueness: Dynamic elements (like timestamps) can be used to generate unique data, avoiding conflicts (e.g., duplicate emails in a database).

- Simplifying test maintenance: Changes to test data (like password updates or additional fields) are made in one place rather than across many test files.

Implement a robust test data strategy:

```javascript
// Test data factory example
```
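A factory along these lines could look like the following TypeScript sketch. Only the `TestDataFactory.createTestUser()` name comes from this section; the `TestUser` fields, the `example.test` email domain, the default password, and the timestamp-plus-counter uniqueness scheme are assumptions for illustration.

```typescript
// Shape of a test user; the fields are illustrative.
interface TestUser {
  firstName: string;
  lastName: string;
  email: string;
  password: string;
}

class TestDataFactory {
  // The counter keeps emails unique even for users created in the same millisecond.
  private static sequence = 0;

  // Creates a fresh user with a unique email; callers may override any field.
  static createTestUser(overrides: Partial<TestUser> = {}): TestUser {
    TestDataFactory.sequence += 1;
    const uniqueSuffix = `${Date.now()}_${TestDataFactory.sequence}`;
    const defaults: TestUser = {
      firstName: "Test",
      lastName: "User",
      email: `test.user+${uniqueSuffix}@example.test`,
      password: "Str0ng!Passw0rd", // single place to update if the password policy changes
    };
    return { ...defaults, ...overrides };
  }
}

// Each test gets its own data, so tests do not collide on unique constraints.
const alice = TestDataFactory.createTestUser({ firstName: "Alice" });
const bob = TestDataFactory.createTestUser();
console.log(alice.email !== bob.email); // true
```

In Jest or Mocha, calling the factory inside a `beforeEach` hook gives every test its own user.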

How This Fits in a Typical Agile Scrum Web Application Project

Automated Testing:

In your test suite (whether using Jest, Mocha, or any other testing framework), you can call TestDataFactory.createTestUser() to obtain a fresh test user. This ensures tests don’t interfere with each other by using stale or duplicate data.

Consistency Across Tests:

Since test data is centralized, any changes (e.g., modifying password policies or adding new fields) need to be updated only in the factory, ensuring consistency across all tests.

Integration with Tools like Jira:

While Jira is used for tracking tasks and user stories, having a robust test data strategy ensures that when features are developed (and their test cases are automated), the underlying data is reliably generated. This supports continuous integration and automated regression testing, which are vital in an Agile Scrum environment.

Robust Error Handling for Agile Testing

In Agile, rapid feedback and continuous improvement are key. As QA Engineers, we design our automated tests not only to validate functionality but also to offer detailed insights when issues occur. Comprehensive error handling plays a vital role in our testing strategy by ensuring that failures are informative and actionable. Consider the following example:

```javascript
try {
```
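A minimal, framework-agnostic sketch of this idea wraps each test step so that a failure reports which step broke and why. The `runStep` helper and `StepFailure` type are hypothetical names; in a Cypress or Playwright suite the step bodies would contain real browser actions instead of the placeholder callbacks used here.

```typescript
// An Error that also records which test step failed.
interface StepFailure extends Error {
  step: string;
}

// Runs one named step and rethrows failures with the step name and the original message.
function runStep<T>(step: string, action: () => T): T {
  try {
    return action();
  } catch (err) {
    const detail = err instanceof Error ? err.message : String(err);
    const failure = new Error(`Step "${step}" failed: ${detail}`) as StepFailure;
    failure.step = step;
    throw failure;
  }
}

// Example: the second step fails, and the report names it directly.
try {
  runStep("open login page", () => "ok");
  runStep("submit credentials", () => {
    throw new Error("Expected status 200 but received 500");
  });
} catch (err) {
  const failure = err as StepFailure;
  console.error(`[${failure.step}] ${failure.message}`);
}
```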

Adaptable Error Handling in Agile Testing

Remember, Agile is a framework—not a one-size-fits-all prescription. You can and should adapt your error handling strategy to meet your business needs. In some cases, it may be preferable for a test not to immediately fail; instead, you might choose to log the error and capture diagnostic information (such as a screenshot) so that the test suite can continue running and capture all issues in one go. Consider the following example:

```typescript
import { test, expect } from '@playwright/test';
```

In this example, if an error occurs, the test will:

- Log the error message to the console.
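The log-and-continue behavior described here can be sketched without browser tooling as a small soft-assertion collector: each failed check is logged and recorded instead of thrown, an optional diagnostics hook runs (in a Playwright test, this is where a screenshot could be captured), and the test fails once at the end with every problem listed. `SoftAssertions` and its hook are hypothetical names; Playwright itself also offers `expect.soft` for non-blocking assertions.

```typescript
// Collects failed checks instead of stopping at the first one.
class SoftAssertions {
  private readonly failures: string[] = [];
  private readonly onFailure: (message: string) => void;

  // onFailure is a hypothetical diagnostics hook (e.g. capture a screenshot in a browser test).
  constructor(onFailure: (message: string) => void = () => {}) {
    this.onFailure = onFailure;
  }

  check(condition: boolean, message: string): void {
    if (!condition) {
      console.error(`Soft assertion failed: ${message}`); // log and keep going
      this.failures.push(message);
      this.onFailure(message);
    }
  }

  // Call at the end of the test: fails once, reporting every collected issue.
  assertAll(): void {
    if (this.failures.length > 0) {
      throw new Error(`${this.failures.length} check(s) failed:\n- ${this.failures.join("\n- ")}`);
    }
  }
}

// Example run against simulated page values: two of three checks fail.
const diagnostics: string[] = [];
const soft = new SoftAssertions((msg) => diagnostics.push(`diagnostic captured for: ${msg}`));
const observed = { heading: "Welcome", cartCount: 3, errorBanner: "" };
soft.check(observed.heading === "Welcome", "page heading");
soft.check(observed.cartCount === 2, `cart badge count: expected 2, got ${observed.cartCount}`);
soft.check(observed.errorBanner.length > 0, "error banner text is empty");
try {
  soft.assertAll();
} catch (err) {
  console.error((err as Error).message);
}
```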

Agile Ceremonies and Testing Integration

Sprint Planning

During sprint planning, testing considerations should be at the forefront of discussions. Here’s how testing integrates with sprint planning:

Testing Considerations in Planning

- Definition of Ready (DoR)

  Stories should include:

  - Clear acceptance criteria

  - Testability requirements

  - Test data needs

  - Performance criteria

- Test Estimation

  Consider time needed for:

  - Test case development

  - Automation script creation

  - Manual testing sessions

  - Cross-browser testing
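A Definition of Ready like this can be checked mechanically before a story is pulled into the sprint. The TypeScript sketch below assumes a hypothetical `Story` record whose fields mirror the four DoR items, plus an estimate broken down by the four testing activities listed; it is not tied to any particular tracker, and the hours are illustrative.

```typescript
// Hypothetical story record; fields mirror the DoR items above.
interface Story {
  key: string;
  acceptanceCriteria: string[];
  testabilityNotes: string;
  testDataNeeds: string[];
  performanceCriteria: string | null;
}

// Returns the DoR items a story is still missing (an empty array means "ready").
function missingReadinessItems(story: Story): string[] {
  const missing: string[] = [];
  if (story.acceptanceCriteria.length === 0) missing.push("acceptance criteria");
  if (story.testabilityNotes.trim() === "") missing.push("testability requirements");
  if (story.testDataNeeds.length === 0) missing.push("test data needs");
  if (story.performanceCriteria === null) missing.push("performance criteria");
  return missing;
}

// Testing effort broken down by the activities listed under Test Estimation (hours).
interface TestingEstimate {
  testCaseDevelopment: number;
  automationScripts: number;
  manualSessions: number;
  crossBrowser: number;
}

function totalTestingHours(e: TestingEstimate): number {
  return e.testCaseDevelopment + e.automationScripts + e.manualSessions + e.crossBrowser;
}

const registrationStory: Story = {
  key: "REG-1",
  acceptanceCriteria: ["User receives a verification email after sign-up"],
  testabilityNotes: "Email service can be stubbed in staging",
  testDataNeeds: [],
  performanceCriteria: "Registration responds in under 2 s",
};

console.log(missingReadinessItems(registrationStory)); // [ 'test data needs' ]
console.log(totalTestingHours({ testCaseDevelopment: 3, automationScripts: 5, manualSessions: 2, crossBrowser: 1.5 })); // 11.5
```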

Daily Stand-ups

Testers should actively participate in daily stand-ups, focusing on:

Daily Testing Updates


Sprint Review

Testing contributes to sprint reviews in two areas:

Quality Metrics Presentation

- Test coverage statistics

- Automated test execution results

- Bug trends and statistics

- Performance test results
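Execution results and bug trends for such a presentation are usually simple aggregates. This TypeScript sketch assumes hypothetical per-sprint records (tests passed and failed, bugs opened and closed); the numbers are illustrative, not benchmarks.

```typescript
// Hypothetical per-sprint snapshot assembled from CI results and the bug tracker.
interface SprintQuality {
  sprint: string;
  testsPassed: number;
  testsFailed: number;
  bugsOpened: number;
  bugsClosed: number;
}

// Automated test pass rate as a percentage, rounded to one decimal place.
function passRate(s: SprintQuality): number {
  const total = s.testsPassed + s.testsFailed;
  return total === 0 ? 0 : Math.round((s.testsPassed / total) * 1000) / 10;
}

// Net change in open bugs per sprint: positive means the backlog grew.
function bugTrend(sprints: SprintQuality[]): number[] {
  return sprints.map((s) => s.bugsOpened - s.bugsClosed);
}

// Illustrative numbers only.
const sprintHistory: SprintQuality[] = [
  { sprint: "Sprint 14", testsPassed: 410, testsFailed: 12, bugsOpened: 9, bugsClosed: 6 },
  { sprint: "Sprint 15", testsPassed: 432, testsFailed: 8, bugsOpened: 5, bugsClosed: 7 },
];

for (const s of sprintHistory) {
  console.log(`${s.sprint}: ${passRate(s)}% of automated tests passed`);
}
console.log(bugTrend(sprintHistory)); // [ 3, -2 ]
```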

Demo Support

- Preparing test data for demos

- Verifying feature stability

- Documenting edge cases

Sprint Retrospective

Testing-focused retrospective topics should include:

Retrospective Testing Focus


Best Practice: Maintain a testing-focused mindset throughout all ceremonies. This ensures quality is built into the process rather than being an afterthought. Remember, testing is not just about finding bugs; it's about preventing them through collaboration and early feedback.

Test Management and Execution

QA Engineer’s Test Management Responsibilities

Test Management Areas:

QA Note: While developers manage their unit test environments, you're responsible for coordinating the broader test environments (integration, staging, UAT). Build strong relationships with DevOps to ensure smooth environment management.

Automation Strategy Implementation

As a QA Engineer, you’ll lead the test automation strategy:

Automation Framework Development:

Example Test Architecture

Here’s a typical test automation structure you’ll maintain:

```text
test-automation/
```

Bug Management Process

As a QA Engineer, you’ll establish the bug management workflow:

```mermaid
graph TD
    A[Bug Discovery] -->|You Report| B[Bug Triage]
    B -->|You Participate| C[Priority Assignment]
    C -->|Dev Team| D[Bug Fix]
    D -->|You Verify| E[Regression Testing]
    E -->|You Sign Off| F[Closed]
    E -->|You Reject| D
```

QA Pro Tip: Create bug report templates that include all necessary information (steps to reproduce, expected vs actual results, environment details, etc.). This speeds up bug resolution and reduces back-and-forth communication.
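The workflow in the diagram can also be written down as an explicit state machine, which helps when validating status changes in scripts or custom tooling. This TypeScript sketch assumes the states map one-to-one to the diagram's nodes; in a tracker such as Jira the same rules would normally live in the project's workflow configuration instead.

```typescript
// States follow the nodes of the bug management diagram.
type BugState = "Discovery" | "Triage" | "PriorityAssignment" | "BugFix" | "RegressionTesting" | "Closed";

// Allowed moves: regression testing either closes the bug or sends it back to fixing.
const TRANSITIONS: Record<BugState, BugState[]> = {
  Discovery: ["Triage"],
  Triage: ["PriorityAssignment"],
  PriorityAssignment: ["BugFix"],
  BugFix: ["RegressionTesting"],
  RegressionTesting: ["Closed", "BugFix"],
  Closed: [],
};

function canTransition(from: BugState, to: BugState): boolean {
  return TRANSITIONS[from].indexOf(to) !== -1;
}

// Replays a history of status changes and returns the final state, rejecting illegal jumps.
function replay(path: BugState[]): BugState {
  if (path.length === 0) {
    throw new Error("Empty status history");
  }
  let current = path[0];
  for (let i = 1; i < path.length; i++) {
    if (!canTransition(current, path[i])) {
      throw new Error(`Invalid transition: ${current} -> ${path[i]}`);
    }
    current = path[i];
  }
  return current;
}

// A bug that is rejected once during regression testing before being signed off.
const finalState = replay([
  "Discovery", "Triage", "PriorityAssignment", "BugFix",
  "RegressionTesting", "BugFix", "RegressionTesting", "Closed",
]);
console.log(finalState); // Closed
```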

Test Metrics and Reporting

As a QA Engineer, you’re responsible for these key metrics:

Test Metrics to Track:

Conclusion

As a QA Engineer in an Agile environment, your role extends far beyond just testing. You are a:

- Quality Advocate - championing best practices and standards

- Strategic Partner - contributing to planning and process improvement

- Technical Expert - leading test automation and tools selection

- Team Collaborator - working closely with developers and product owners

- Process Guardian - ensuring quality gates are maintained

Success in your role requires:

- Proactive involvement in all sprint activities

- Strong communication with all team members

- Continuous learning of new testing tools and techniques

- Balance between manual and automated testing

- Data-driven decision making using metrics

Remember that as a QA Engineer, you're not just finding bugs - you're helping build quality into the product from the start. Your early involvement and continuous feedback are crucial to the team's success.

Career Growth Tip: Keep a portfolio of your test strategies, automation frameworks, and quality metrics. Document your contributions to process improvements and team success. This evidence of your impact will be valuable for your career development.